Recursive n-dimensional scalar field interpolation

Background

Lets say you have a smooth scalar function of any number of variables \(f:\mathbb{R}^n\mapsto\mathbb{R}^1\). Let’s assume that the function is computationally expensive to evaluate, but you need to do this at run-time, possibly a lot. Let’s also assume that you can define the maximum and minimum range of values which you are interested in. Let’s also assume that the function is smooth, and you’re tolerant of (or even prefer) the results to be smoothed over.

One possible solution is to sample values of the function over a regular grid, and perform some sort of interpolation between the values. So we’ll define a different function \(g:\mathbb{R}^n\mapsto\mathbb{R}^1,,g\approx f\). Depending on the desired smoothness of the approximation different interpolation functions are feasible, but not all of these generalise to any dimension. One method which does, however, is the Catmull-Rom Spline (co-invented by the CG superhero Ed Catmull) which can be evaluated recursively on grid edges and exhibits \(C^2\) continuity.

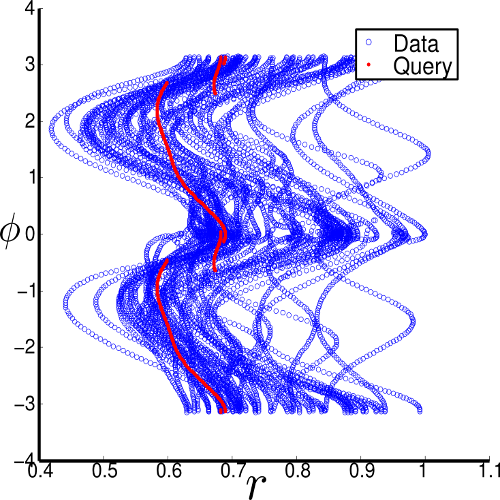

I’ll explain how this works with the help of this figure, representing a 2D scalar field:

Applications

You might be wondering when you might you want to use an application like this? I developed it in the context of a sparse, high dimensional Moving Least Squares projection: if you are projecting onto a point cloud that is irregular, you need an adaptive, variable radius function in order to ensure that you have sufficient local points on which to project. As it’s expensive to compute local neighbours at run time (especially in, say, 66 dimensions) it’s probably a good idea to define an approximating smooth function which, given any point in space, returns a radius that will probably contain a desired number of point samples.

Implementation

There are a number of features used in this implementation, which I’m going to unpick from the implementation and discuss separately.

Passing around functions

Fundamental to this process is the ability to evaluate a generic function at a point. It would be very convenient for us to be able to pass said function around as a generic function pointer. For a simple generic function signature, I’ll use a function that accepts an array of doubles, and returns a double. This can be stated using the std::function method:

std::function<double (double*)> _func;

Note that the type notation is C notation for a function that accepts a pointer to a double, and returns a double. This makes _func a generic function that can be passed as a parameter - particularly convenient if we’re allowing the use of arbitrary input functions. Of course, the return type and array size could be specified as template parameters, but for interaction with existing functions (e.g. libnoise) I’ve kept it simple.

Templates and C++11

I do, however, use templates for the actual ScalarField class. In an early implementation of this structure I just used dynamic arrays of doubles to store the data - but this gives us the overhead of dynamic allocation and deallocation, along with the risks associated with non-contiguous memory. C++11 introduces the std::array, which is essentially an old skool C static array, but with all the handy goodness of the STL.

This is from the top of the ScalarField class:

template <uint DIM>

class ScalarField {

public:

/// Unsigned int array

typedef std::array<uint, DIM> uintArray;

/// Double array

typedef std::array<double, DIM> doubleArray;

The template parameter is evaluated at compile time, generating a template class for the desired dimension. Whenever I create an object of type doubleArray in the code, this is done statically.

Note that I could have given a template parameter for a real type and an integer type, but that will add some unnecessary complexity for now.

Recursive Algorithm

The core of the algorithm is the construction of the grid and the recursive evaluation of the query point. Fortunately these are reasonably similar. Lets have a look at the init routine:

/**

* @brief ScalarField::initialise Inits the scalar field

* @param _func A boost-ified function declaration which returns the scalar value

* @param _minValue The minimum values of our given field.

* @param _maxValue The maximum values of our given field.

* @param _resolution The resolution of the field in each dimension.

*/

template<uint DIM>

void ScalarField<DIM>::init(std::function<double (double*)> _func,

const doubleArray &_minValue,

const doubleArray &_maxValue,

sconst uintArray& _resolution) {

// Check we've not been here before already

if (m_isInit) cleanup();

// Precompute the partition size in each case

// Now we can allocate some memory for our data

// Now for each value in the field we can use the provided function to derive the scalar value

// this is done recursively (could easily be done in parallel

doubleArray pos; uintArray idx;

initRecurse(_func, 0u, idx, pos);

// Set this to initialised

m_isInit = true;

}

Not very much excitement there: we set up the memory and ready ourselves for running the recursive function. The initRecurse function is obviously where the action is - so I’ll break it down into stages:

/**

* @brief ScalarField::initRecurse Recursively initialise the scalar field along each dimension.

* @param _func The boostified function to execute to determine the scalar value

* @param dim The current dimension (start this at 0)

* @param idx The current index to evaluate (empty when you start)

* @param pos The position to evaluate (empty when you start)

*/

template<uint DIM>

void ScalarField<DIM>::initRecurse(std::function<double (double*)> _func,

const uint &dim,

uintArray &idx,

doubleArray &pos) {

The prototype reveals a lot about the functionality of the function. The first parameter is the function that we want to call at the grid points. The second parameter is the current dimension. You’ll notice from the code above that this starts at 0 - each time the function is called, the dimension number will tick up until it gets to the actual dimension, which is our recursion termination criteria. Index and the position values fill up after the recursion unrolls.

// At the end of the recursion chain we can calculate the value using the function

if (dim >= DIM) {

// First determine the index in the data

...

// Now set the value of the data in the right place to the result of the function

m_data[dataIdx] = _func(&pos[0]);

return;

}

At the end of the recursion chain, we evaluate the given function at the position. This isn’t straightforward, as I’ve stored all the data in a big flat 1D array. However I’ve removed the details for simplicity.

If the function didn’t return, we know that we’re not at the end of the chain so we recursively evaluate across each grid dimension:

// If we didn't get to the end of the chain, we run another iteration

for (i = 0 ; i < m_resolution[dim]; ++i) {

idx[dim] = i;

pos[dim] = m_minValue[dim] + i * m_partSize[dim];

initRecurse(_func, dim+1, idx, pos);

}

In this way, each node in each dimension is initialised with our input function.

The evaluation function is a bit more tricky:

/**

* @brief ScalarField::eval Evaluate the scalar value at the specified point.

* @param pt The position at which to evaluate the scalar field

* @return

*/

template<uint DIM>

double ScalarField<DIM>::eval(const doubleArray& pt) const {

if (!m_isInit) return 0.0;

// Determine the index of the bottom corner of the evaluation grid (if outside, extrapolation

// may be used, so it's capped at the bounds of the grid)

...

// Deduce the index of the points from the min, max grid values and total resolution

...

// Evaluate the position recursively over all dimensions

double ret_val = evalRecurse(0, idx, currentIdx, x);

// Clear memory and return

return ret_val;

}

This is largely the same as the init function above, except that you will need to calculate the bottom corner index of the grid cell in which the query point lives (which is messy, and hidden away).

The header for evalRecurse is more or less the same as initRecurse so I won’t repeat myself. The big difference, of course, is that this function actually returns a value. Here’s the termination condition:

// The termination condition - just return the scalar value at the specified position

if (dim >= DIM) {

// First determine the index in the data

...

// Just return the data value at this position!

return m_data[dataIdx];

}

This just returns the value at the grid point - this makes more sense in the context of the general condition below:

else {

// Evaluate the catmull rom spline recursively by combining the current indices

// along all dimensions

double v0, v1, v2, v3;

currentIdx[dim] = idx[dim] + 0; v0 = evalRecurse(dim+1, idx, currentIdx, x);

currentIdx[dim] = idx[dim] + 1; v1 = evalRecurse(dim+1, idx, currentIdx, x);

currentIdx[dim] = idx[dim] + 2; v2 = evalRecurse(dim+1, idx, currentIdx, x);

currentIdx[dim] = idx[dim] + 3; v3 = evalRecurse(dim+1, idx, currentIdx, x);

return catmullRomSpline(x[dim], v0, v1, v2, v3);

}

So this function spawns 4 different evalRecurses for this thread, each one potentially spawning another 4 ad nausium. The results of each thread re interpolated using our magical catmullRomSpline function (which is really easy - check the code).

Conclusions

This is a nice example of an bit of code that is useful for teaching and research: there are a couple of nice C++11, C++ and C principles at play which greatly simplify the implementation and improve performance. The research behind it pretty nifty and broadly applicable (I would think).

You may have observed that you could use this approach for general dimensional mapping, i.e. $f:\mathbb{R}^n\mapsto\mathbb{R}^m$, assuming that you create m different ScalarFields. Vector Field intepolation might be more difficult as they will require some recombination function (e.g. normalisation), but not impossible.

Parallelism is an obviously missing thing here: in very high dimensional data sets this could prove to be particularly useful - it is essentially a scan-reduce function. Send me an email if you want this and I can check this out.

Downloads

The source code is hosted on GitHub. Clone it from the repository using the following command from your console:

git clone https://github.com/rsouthern/Examples