Learning WebGPU Part 2 First Triangle

Hello Triangle

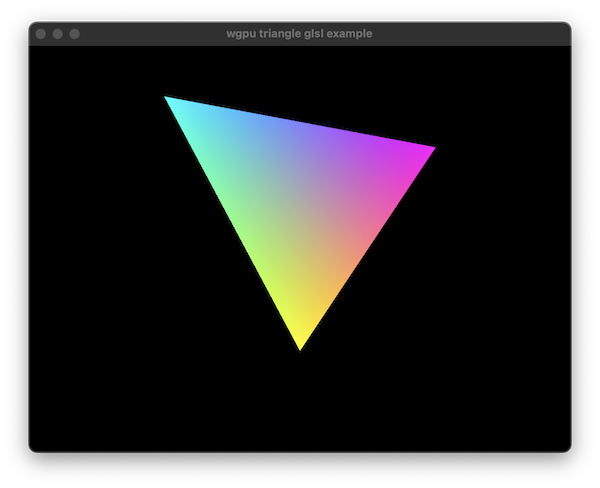

The hello world of graphics programming is the “Hello Triangle” program. This is a simple program that draws a triangle to the screen.

The wgpu-py library provides a few simple examples, in particular triangle.py and triangle_glsl.py. I chose the triangle_glsl.py example as a starting point as I am familiar with the GLSL shading language, and it meant I didn’t need to look at the Rust-like WGSL shading language immediately.

The Code

I will now dissect the code to explain what each part does and how I will begin to use this to develop my own code and examples.

Shaders

The file starts with the standard vertex and fragment shaders. These are written in GLSL and define the vertex and fragment processing for the triangle.

#version 450 core
layout(location = 0) out vec4 color;

void main()
{
    vec2 positions[3] = vec2[3](
        vec2(0.0, -0.5),
        vec2(0.5, 0.5),
        vec2(-0.5, 0.75)
    );
    vec3 colors[3] = vec3[3](  // srgb colors
        vec3(1.0, 1.0, 0.0),
        vec3(1.0, 0.0, 1.0),
        vec3(0.0, 1.0, 1.0)
    );
    int index = int(gl_VertexID);
    gl_Position = vec4(positions[index], 0.0, 1.0);
    color = vec4(colors[index], 1.0);
}

What is interesting about this shader is that there are no uniforms or attributes. The vertex shader hard-codes both the positions and the colours of the three vertices in arrays, and uses gl_VertexID to select the entry for the current vertex. The selected colour is then passed on to the fragment shader.

This means that the rest of the code is not generating any form of data to pass to the shaders, so it is going to be very bare bones.
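To make the table lookup concrete, here is a rough plain-Python emulation of what the vertex shader above does for each vertex. The function name vertex_main and the tuple layout are my own choices for illustration, not anything from wgpu-py.

```python
# Plain-Python emulation of the vertex shader's table lookup (illustrative only).
POSITIONS = [(0.0, -0.5), (0.5, 0.5), (-0.5, 0.75)]
COLORS = [(1.0, 1.0, 0.0), (1.0, 0.0, 1.0), (0.0, 1.0, 1.0)]  # sRGB colours


def vertex_main(vertex_index):
    """Return (position, colour) for one vertex, as the GLSL main() does."""
    x, y = POSITIONS[vertex_index]
    r, g, b = COLORS[vertex_index]
    position = (x, y, 0.0, 1.0)  # z = 0 and w = 1, as in gl_Position
    color = (r, g, b, 1.0)       # opaque alpha
    return position, color


# Drawing three vertices runs the shader once for each index 0, 1, 2.
outputs = [vertex_main(i) for i in range(3)]
for position, color in outputs:
    print(position, color)
```

Nothing here comes from a buffer, which is why the Python side of the example has no vertex data to upload.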

#version 450 core
out vec4 FragColor;
layout(location = 0) in vec4 color;

void main()
{
    vec3 physical_color = pow(color.rgb, vec3(2.2));  // gamma correct
    FragColor = vec4(physical_color, color.a);
}

The fragment shader just takes the interpolated colour from the vertex shader and raises it to the power 2.2, converting the sRGB values to linear (“physical”) ones. This kind of gamma correction is very standard for GLSL.
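As a quick sanity check of what that pow() does to the numbers, here is a small Python sketch of the same arithmetic. The function names are hypothetical, and the plain 2.2 exponent is the same approximation the shader uses rather than the exact piecewise sRGB curve.

```python
def srgb_to_linear_approx(channel, gamma=2.2):
    """Approximate sRGB-to-linear conversion, mirroring pow(color.rgb, vec3(2.2))."""
    return channel ** gamma


def fragment_main(color):
    """Return the output colour for an interpolated RGBA input; alpha is untouched."""
    r, g, b, a = color
    return (
        srgb_to_linear_approx(r),
        srgb_to_linear_approx(g),
        srgb_to_linear_approx(b),
        a,
    )


corner = fragment_main((1.0, 1.0, 0.0, 1.0))  # channels of 0 or 1 are unchanged
middle = fragment_main((0.5, 0.5, 0.5, 1.0))  # mid-grey becomes noticeably darker
print(corner)
print(middle)
```

So the pure colours at the corners of the triangle are unaffected, and only the blended values in between are changed.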

__main__

if __name__ == "__main__":
    from wgpu.gui.auto import WgpuCanvas, run

    canvas = WgpuCanvas(size=(640, 480), title="wgpu triangle glsl example")
    draw_frame = setup_drawing_sync(canvas)
    canvas.request_draw(draw_frame)
    run()

As you can see the main function is very simple, but it does hide a lot of “library” code which I will delve into in the next post.

The core of this is that the WgpuCanvas class will, under the hood, create a window and the texture buffers to render to, and provide the ability to blit the result to the screen.

The user just has to set up the drawing pipeline and then request a draw.

What is a pipeline?

In most modern graphics APIs the rendering pipeline is a series of stages that the data passes through to be rendered to the screen. This must be specified explicitly by the user (unlike with the OpenGL state machine). It is a very powerful feature of modern APIs, but to the uninitiated it can be a little daunting.

In OpenGL (and older DirectX) we typically have something like the following, most of which is managed by the API:

- Input Assembly (IA)
  - Reads vertex data from buffers.
  - Organizes vertices into primitives (triangles, lines, etc.).

- Vertex Shader
  - Processes individual vertices.
  - Applies transformations (e.g., Model-View-Projection).

- Tessellation (Optional, for advanced surfaces)
  - Subdivides geometry for finer detail.
  - Uses control shaders to dynamically refine models.

- Geometry Shader (Optional)
  - Can modify, create, or discard primitives on the fly.
  - Useful for procedural effects like billboarding.

- Rasterization
  - Converts vector primitives into fragments (potential pixels).
  - Clips and culls primitives outside the view.

- Fragment (Pixel) Shader
  - Determines final color, depth, and other per-pixel properties.
  - Handles lighting, texturing, and shading effects.

- Output Merging & Framebuffer
  - Performs depth testing, blending, and writes final pixel values to the framebuffer.

WebGPU and Vulkan are more explicit in that the user must define the pipeline and the stages that the data will pass through. This is a more complex but also more flexible way of working.
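To get a feel for how the main stages fit together, here is a toy software version of the vertex, rasterization and fragment stages for our triangle, written in plain Python. It is only a sketch under my own assumptions: a tiny ASCII framebuffer, pixel-centre sampling, and made-up names such as vertex_stage and rasterize. It is not how a GPU or wgpu actually implements these stages.

```python
# Toy software pipeline: vertex stage -> rasterization -> fragment stage.
WIDTH, HEIGHT = 24, 12
TRIANGLE = [(0.0, -0.5), (0.5, 0.5), (-0.5, 0.75)]  # positions from the shader


def vertex_stage(position):
    """Vertex shader stand-in: pass the 2D position through unchanged."""
    return position


def to_ndc(col, row):
    """Map a pixel centre to normalized device coordinates in [-1, 1]."""
    x = (col + 0.5) / WIDTH * 2.0 - 1.0
    y = 1.0 - (row + 0.5) / HEIGHT * 2.0  # row 0 is the top of the image
    return x, y


def edge(a, b, p):
    """Signed-area test: tells which side of the edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(v0, v1, v2):
    """Yield (col, row) for every pixel whose centre lies inside the triangle."""
    for row in range(HEIGHT):
        for col in range(WIDTH):
            p = to_ndc(col, row)
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Accept either winding order, i.e. no culling.
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                yield col, row


def fragment_stage(col, row):
    """Fragment shader stand-in: every covered pixel gets the same 'colour'."""
    return "#"


def render():
    """Run the three stages and return the framebuffer as a list of strings."""
    framebuffer = [["." for _ in range(WIDTH)] for _ in range(HEIGHT)]
    v0, v1, v2 = (vertex_stage(p) for p in TRIANGLE)
    for col, row in rasterize(v0, v1, v2):
        framebuffer[row][col] = fragment_stage(col, row)
    return ["".join(line) for line in framebuffer]


image = render()
print("\n".join(image))
```

In an explicit API each of these steps corresponds to something we have to describe up front when building the pipeline, rather than state we toggle as we go.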

setup_drawing_sync

def setup_drawing_sync(canvas, power_preference="high-performance", limits=None):
    """Regular function to set up a viz on the given canvas."""
    adapter = wgpu.gpu.request_adapter_sync(power_preference=power_preference)
    device = adapter.request_device_sync(required_limits=limits)
    render_pipeline = get_render_pipeline(canvas, device)
    return get_draw_function(canvas, device, render_pipeline)

This function uses some of the internals of the wgpu library to set up the drawing pipeline. It requests an adapter and a device and then creates a render pipeline.

The power_preference is a hint to the system to use the high-performance (discrete) GPU if available. The limits define the maximum capabilities we require of the GPU, such as the number of bind groups, textures, or buffer sizes. These limits vary depending on the hardware and driver support.

At this stage we are not really worried about them, so we leave limits set to None.

get_render_pipeline

This function is quite complex as it covers a number of the stages in the development of the pipeline.

- create the shaders

- create_pipeline_layout

- associate the canvas with the window / canvas

- create the render pipeline

1. Create the shaders

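WebGPU itself only specifies WGSL as its shading language, but wgpu-py can also accept SpirV and (experimentally) GLSL source, so the GLSL strings above can be used directly. This is done using the create_shader_module function.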

create_shader_module(**parameters)

Create a GPUShaderModule object from shader source. The primary shader language is WGSL, though SpirV is also supported, as well as GLSL (experimental).

Parameters:

- label (str) – A human-readable label. Optional.

- code (str | bytes) – The shader code, as WGSL, GLSL or SpirV. For GLSL code, the label must be given and contain the word ‘comp’, ‘vert’ or ‘frag’. For SpirV the code must be bytes.

- compilation_hints – currently unused.

This returns a GPUShaderModule object.

In this demo the shaders are standard python strings so are just passed to the function.
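The labelling rule for GLSL quoted above is easy to trip over, so here is a small sketch of a check that could be run before calling create_shader_module. The helper glsl_stage_from_label is hypothetical and is not part of wgpu-py. The labels used below are only example values, and treating the match as case-sensitive is my own assumption.

```python
def glsl_stage_from_label(label):
    """Return the shader stage implied by a label, following the documented rule.

    Hypothetical helper for illustration only; wgpu-py has its own internal logic.
    """
    keywords = (("vert", "vertex"), ("frag", "fragment"), ("comp", "compute"))
    for keyword, stage in keywords:
        if keyword in label:
            return stage
    raise ValueError(f"GLSL label {label!r} must contain 'comp', 'vert' or 'frag'")


print(glsl_stage_from_label("triangle_vert"))
print(glsl_stage_from_label("triangle_frag"))
```

A label without any of the three keywords raises a ValueError here, which is a cheap way to catch the mistake before the shader is compiled.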

2. create_pipeline_layout

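The next step is to create a pipeline layout. This describes the resources (bind groups holding things like uniform buffers and textures) that the shaders in the pipeline can access. We use the create_pipeline_layout function to do this. As our shaders use no such resources, the list of bind group layouts is empty.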

create_pipeline_layout(**parameters)

Create a GPUPipelineLayout object, which can be used in create_render_pipeline() or create_compute_pipeline().

Parameters:

- label (str) – A human-readable label. Optional.

- bind_group_layouts (list) – A list of GPUBindGroupLayout objects.

# No bind group and layout, we should not create empty ones.

pipeline_layout = device.create_pipeline_layout(bind_group_layouts=[])

3. Associate the canvas with the window / canvas

The next step is to connect the device to the canvas we are rendering to. This is all done through the GPUCanvasContext object.

To quote the documentation:

The canvas-context plays a crucial role in connecting the wgpu API to the GUI layer, in a way that allows the GUI to be agnostic about wgpu. It combines (and checks) the user’s preferences with the capabilities and preferences of the canvas.

For now we just need to create the context and configure it with the device and the format of the render texture. This is a core part of the API that I am going to override later so that I can use my own windowing system.

This code sets up everything we need, and in particular gives us access to the dimensions of the screen we are rendering to.

present_context = canvas.get_context("wgpu")

render_texture_format = present_context.get_preferred_format(device.adapter)

present_context.configure(device=device, format=render_texture_format)

4. Create the render pipeline

This is the final step in the process and is where we create the render pipeline. We use the create_render_pipeline function to do this.

It has a lot of parameters:

create_render_pipeline(**parameters)

Create a GPURenderPipeline object.

Parameters:

- label (str) – A human-readable label. Optional.

- layout (GPUPipelineLayout) – The layout for the new pipeline.

- vertex (structs.VertexState) – Describes the vertex shader entry point of the pipeline and its input buffer layouts.

- primitive (structs.PrimitiveState) – Describes the primitive-related properties of the pipeline. If strip_index_format is present (which means the primitive topology is a strip), and the drawCall is indexed, the vertex index list is split into sub-lists using the maximum value of this index format as a separator. Example: a list with values [1, 2, 65535, 4, 5, 6] of type “uint16” will be split in sub-lists [1, 2] and [4, 5, 6].

- depth_stencil (structs.DepthStencilState) – Describes the optional depth-stencil properties, including the testing, operations, and bias. Optional.

- multisample (structs.MultisampleState) – Describes the multi-sampling properties of the pipeline.

- fragment (structs.FragmentState) – Describes the fragment shader entry point of the pipeline and its output colors. If it’s None, the No-Color-Output mode is enabled: the pipeline does not produce any color attachment outputs. It still performs rasterization and produces depth values based on the vertex position output. The depth testing and stencil operations can still be used.

These parameters describe the various stages of the pipeline and how they are connected. This is one of the biggest departures from OpenGL where the pipeline is defined by the API and the user just sets the state. Again more on this in a later post.
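The strip_index_format behaviour described in the primitive parameter is easier to see in code. Below is a minimal Python sketch of the splitting rule using the example from the documentation. The function name split_strip_indices is my own, and this only models the rule; the real splitting happens on the GPU.

```python
def split_strip_indices(indices, index_format="uint16"):
    """Split an index list at the restart value (the maximum of the index format)."""
    restart_values = {"uint16": 0xFFFF, "uint32": 0xFFFFFFFF}
    restart = restart_values[index_format]
    strips, current = [], []
    for index in indices:
        if index == restart:
            # The separator ends the current strip and is not itself drawn.
            if current:
                strips.append(current)
            current = []
        else:
            current.append(index)
    if current:
        strips.append(current)
    return strips


print(split_strip_indices([1, 2, 65535, 4, 5, 6]))  # prints [[1, 2], [4, 5, 6]]
```

None of this applies to our triangle, which uses a triangle list and no index buffer.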

The following code is used to create the render pipeline.

return device.create_render_pipeline(
    layout=pipeline_layout,
    vertex={
        "module": vert_shader,
        "entry_point": "main",
    },
    primitive={
        "topology": wgpu.PrimitiveTopology.triangle_list,
        "front_face": wgpu.FrontFace.ccw,
        "cull_mode": wgpu.CullMode.none,
    },
    depth_stencil=None,
    multisample=None,
    fragment={
        "module": frag_shader,
        "entry_point": "main",
        "targets": [
            {
                "format": render_texture_format,
                "blend": {
                    "color": {},
                    "alpha": {},
                },
            },
        ],
    },
)

The shader entries are passed in as dictionaries containing the module and the entry point: vert_shader for the vertex stage and frag_shader for the fragment stage. The entry point is the function in the shader where execution starts, which in this case is called main as this is the GLSL convention. If we use WGSL we can have a single file with multiple entry points.

The primitive is defined as a triangle list with no culling. This is again an important departure from OpenGL, where the draw call specifies the primitive type and the current state determines the culling and the winding order.

As we will see mixing lines, triangles and the other primitives will require multiple pipelines.
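Because the topology is baked into the pipeline, the same run of vertices is grouped differently depending on which pipeline is bound. The sketch below shows that grouping for two of the WebGPU topologies in plain Python. The function assemble_triangles is hypothetical, and it ignores the alternating winding order that real triangle strips have.

```python
def assemble_triangles(vertex_indices, topology):
    """Group vertex indices into triangles for the given primitive topology."""
    count = len(vertex_indices)
    if topology == "triangle-list":
        # Every three vertices form an independent triangle; leftovers are dropped.
        usable = count - count % 3
        return [tuple(vertex_indices[i:i + 3]) for i in range(0, usable, 3)]
    if topology == "triangle-strip":
        # Each new vertex forms a triangle with the previous two.
        return [tuple(vertex_indices[i:i + 3]) for i in range(count - 2)]
    raise ValueError(f"unsupported topology in this sketch: {topology}")


print(assemble_triangles([0, 1, 2], "triangle-list"))
print(assemble_triangles([0, 1, 2, 3, 4, 5], "triangle-list"))
print(assemble_triangles([0, 1, 2, 3, 4, 5], "triangle-strip"))
```

Six vertices give two triangles as a list but four as a strip, which is why the topology cannot simply be swapped at draw time as it can in OpenGL.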

Finally some rendering

The final part of the code is the draw function that is returned from the setup_drawing_sync function. This is a simple function that just binds the render pipeline and then draws the triangle.

def get_draw_function(canvas, device, render_pipeline):
    def draw_frame():
        current_texture = canvas.get_context("wgpu").get_current_texture()
        command_encoder = device.create_command_encoder()

        render_pass = command_encoder.begin_render_pass(
            color_attachments=[
                {
                    "view": current_texture.create_view(),
                    "resolve_target": None,
                    "clear_value": (0, 0, 0, 1),
                    "load_op": wgpu.LoadOp.clear,
                    "store_op": wgpu.StoreOp.store,
                }
            ],
        )

        render_pass.set_pipeline(render_pipeline)
        # render_pass.set_bind_group(0, no_bind_group)
        render_pass.draw(3, 1, 0, 0)
        render_pass.end()
        device.queue.submit([command_encoder.finish()])

    return draw_frame

Again, the way modern APIs work is to record a series of commands that are then submitted to the GPU together. This is a very different way of working to OpenGL, where commands are issued one call at a time and appear to execute immediately.

The basic process is

1. Create a Command Encoder – Starts recording GPU commands.

2. Record Commands – Add render passes, compute passes, or copy operations.

3. Finish Encoding – Convert the recorded commands into a command buffer.

4. Submit the Command Buffer – Send it to the GPU for execution.

When we create the render pass we pass a color_attachments list that specifies the render target (a view of the current texture associated with the canvas), the clear colour, and the load and store operations.

Next the pipeline is set. This holds basically all the state the GPU needs to render the triangle.

Finally the draw call is made to render the triangle. This is very similar to the OpenGL glDrawArrays call.

draw(vertex_count: int, instance_count: int = 1, first_vertex: int = 0, first_instance: int = 0)

Run the render pipeline without an index buffer.

Parameters:

- vertex_count (int) – The number of vertices to draw.

- instance_count (int) – The number of instances to draw. Default 1.

- first_vertex (int) – The vertex offset. Default 0.

- first_instance (int) – The instance offset. Default 0.
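To see what these four numbers mean for our shader, here is a small Python sketch that lists the vertex and instance indices a non-indexed draw covers. The function draw_invocations is my own illustration and not a wgpu call.

```python
def draw_invocations(vertex_count, instance_count=1, first_vertex=0, first_instance=0):
    """List the (vertex_index, instance_index) pairs a non-indexed draw covers."""
    return [
        (vertex, instance)
        for instance in range(first_instance, first_instance + instance_count)
        for vertex in range(first_vertex, first_vertex + vertex_count)
    ]


print(draw_invocations(3, 1, 0, 0))  # prints [(0, 0), (1, 0), (2, 0)]
```

So draw(3, 1, 0, 0) runs the vertex shader three times with indices 0, 1 and 2, which are exactly the indices used to look up the hard-coded positions and colours.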

Once all the draw calls are made the command encoder is finished and the commands are submitted to the GPU which will then render the triangle to the screen.
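The record-then-submit idea can be modelled in a few lines of plain Python. The classes ToyCommandEncoder and ToyQueue below are entirely made up for illustration and only mimic the shape of the workflow; the real wgpu objects do far more.

```python
class ToyQueue:
    """Stands in for the device queue: commands only 'run' when submitted."""

    def __init__(self):
        self.executed = []

    def submit(self, command_buffers):
        for buffer in command_buffers:
            self.executed.extend(buffer)


class ToyCommandEncoder:
    """Records commands and turns them into an immutable command buffer."""

    def __init__(self):
        self._commands = []
        self._finished = False

    def record(self, command):
        if self._finished:
            raise RuntimeError("encoder already finished")
        self._commands.append(command)

    def finish(self):
        self._finished = True
        return tuple(self._commands)


queue = ToyQueue()
encoder = ToyCommandEncoder()
for command in ("begin_render_pass", "set_pipeline", "draw(3, 1, 0, 0)", "end"):
    encoder.record(command)

executed_before_submit = list(queue.executed)  # nothing has run yet
command_buffer = encoder.finish()
queue.submit([command_buffer])

print(executed_before_submit)
print(queue.executed)
```

The point to notice is that recording does nothing visible on its own; the work only happens once the finished command buffer is handed to the queue.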

There is still quite a lot being done behind the scenes by the wgpu library, but this is a good starting point for understanding how the rendering pipeline is set up, how the draw calls are made and, in particular, which parts of the pipeline are needed and how they are connected.

Potential Learning Outcomes

From this simple example there is a lot to unpack, in particular the following learning outcomes need to be considered.

- Where do we render to?

- How do we set up the rendering pipeline?

- What are the stages of the rendering pipeline?

- What is a shader?

- What is a bind group?

- What is a render pass?

- What is a command encoder?

- What is a command buffer?

- What is a pipeline layout?

These are all key concepts that need to be understood to be able to develop a WebGPU application.

What’s Next?

Personally I didn’t like the style of the Windowing API and how it was setup, so I decided to dig a little deeper into the library to see how they implemented the Qt side of things. The next post will cover how the library is structured and how the Qt side of things is implemented.